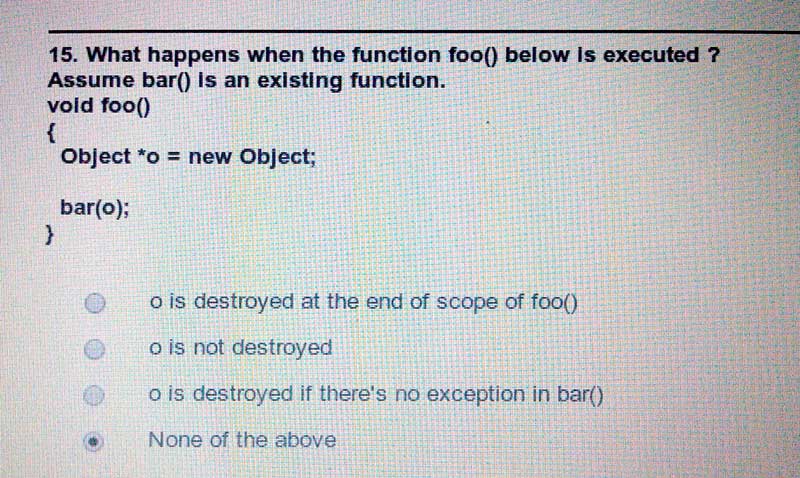

I saw a question online recently that essentially asked whether big-O notation always holds true in practice. In theory, for example, searching through a vector for an item should be O(n), whereas searching a map (or binary-searching a sorted vector) should be O(log n). Right?

Let’s imagine we create a vector with 100,000 random integers in it. We then sort the vector and run two different searches on it. The first search starts at the beginning and compares each element against the value we’re searching for. It simply keeps incrementing until it finds that value (or the first element greater than it). A naive search, you might say. Without resorting to std:: algorithms, how might you improve it?

A worthy idea might be a recursive binary (or bisection) search. Suppose we want a value three-quarters of the way through our range. A bisection search needs only around 17 steps (log2 100,000 ≈ 16.6), compared to roughly 75,000 comparisons with the brute-force approach.

It’s fairly safe to say that the binary search is going to be much faster, right?

Not necessarily. See the results below:

```text
100000 random numbers generated, inserted, and sorted in a vector:
0.018477s wall, 0.010000s user + 0.000000s system = 0.010000s CPU (54.1%)

Linear search iterations: = 75086, time taken:
0.000734s wall, 0.000000s user + 0.000000s system = 0.000000s CPU (n/a%)

Binary search iterations: 17, time taken:
0.001327s wall, 0.000000s user + 0.000000s system = 0.000000s CPU (n/a%)
```

Are you surprised to see that the binary search took (very) slightly longer than the brute force approach?

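Welcome to the world of modern processors. What’s going on there is a healthy dose of branch prediction and plenty of processor cache hits. The linear scan walks contiguous memory in order, so the hardware prefetcher keeps the data coming. The loop’s branch is also predicted correctly almost every time. Together these make the brute-force approach far more viable than its O(n) label suggests.

Don’t read too much into these particular figures, though. Each timed block measures a single search together with its console output. Seventeen comparisons take a tiny fraction of a millisecond, so at this scale the timer is mostly measuring I/O and overhead rather than the searches themselves.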

I guess the moral of this story is, as always, don’t optimise prematurely – because you might actually make things worse! Always profile your hot spots, and work empirically rather than relying on what you think you know about algorithmic efficiency.

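The code used here is reproduced below – it should compile on VS 2015, clang and g++ without issue as C++14 (the `100'000` digit separator requires it). You’ll need Boost for the timers, and you’ll have to link against the compiled Boost.Timer library. You may see wildly different timings depending on optimisation levels and other factors :)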

```cpp
#include <algorithm>
#include <iostream>
#include <random>
#include <string>
#include <vector>

#include "boost/timer/timer.hpp"

const int MAX_VAL = 100'000;  // digit separator requires C++14

template <typename T>
T LinearSearch(typename std::vector<T>::iterator begin,
               typename std::vector<T>::iterator end, T value)
{
    // While not ostensibly efficient, branch prediction and CPU cache for the
    // contiguous vector data should make this go like greased lightning :)
    size_t counter{0};
    while (begin < end)
    {
        // Stop at the first element that is >= the value we want
        if (*begin >= value)
        {
            std::cout << "\nLinear search iterations: = " << counter;
            return *begin;
        }
        ++begin;
        ++counter;
    }
    return 0;  // not found
}

template <typename T>
T BinarySearch(typename std::vector<T>::iterator begin,
               typename std::vector<T>::iterator end, T value,
               size_t depth = 1)
{
    // Recursive bisection over the half-open range [begin, end). The return
    // value is not exact... just interested in getting close to the value.
    // Sub-ranges never step outside [begin, end), so no iterator is ever
    // moved before the start of the vector.
    if (begin == end)
    {
        return T{};  // empty range: nothing to find
    }
    auto mid = begin + (end - begin) / 2;
    if (end - begin == 1 || *mid == value)
    {
        std::cout << "\nBinary search iterations: " << depth;
        return *mid;
    }
    if (*mid < value)
    {
        return BinarySearch(mid, end, value, depth + 1);  // keep upper half
    }
    return BinarySearch(begin, mid, value, depth + 1);    // keep lower half
}

int main()
{
    // Fill a vector with MAX_VAL random numbers between 0 and MAX_VAL
    std::vector<unsigned int> vec;
    vec.reserve(MAX_VAL);
    {
        boost::timer::auto_cpu_timer tm;  // reports when it goes out of scope
        std::random_device randomDevice;
        std::default_random_engine randomEngine(randomDevice());
        std::uniform_int_distribution<unsigned int> uniform_dist(0, MAX_VAL);
        for (int n = 0; n < MAX_VAL; ++n)
        {
            vec.emplace_back(uniform_dist(randomEngine));
        }
        // Sort the vector (binary search requires sorted data)
        std::sort(vec.begin(), vec.end());
        std::cout << MAX_VAL
                  << " random numbers generated, inserted, and sorted"
                     " in a vector:" << std::endl;
    }

    // Search for a value three-quarters of the way through the range.
    // Note: each timed block below also includes console output.
    const auto target = static_cast<unsigned int>(MAX_VAL * 0.75);
    {
        boost::timer::auto_cpu_timer tm2;
        LinearSearch(vec.begin(), vec.end(), target);
        std::cout << ", time taken:" << std::endl;
    }
    {
        boost::timer::auto_cpu_timer tm2;
        BinarySearch(vec.begin(), vec.end(), target);  // half-open range
        std::cout << ", time taken:" << std::endl;
    }
    return 0;
}
```